Niyati Pancholi / Sputnik Photography

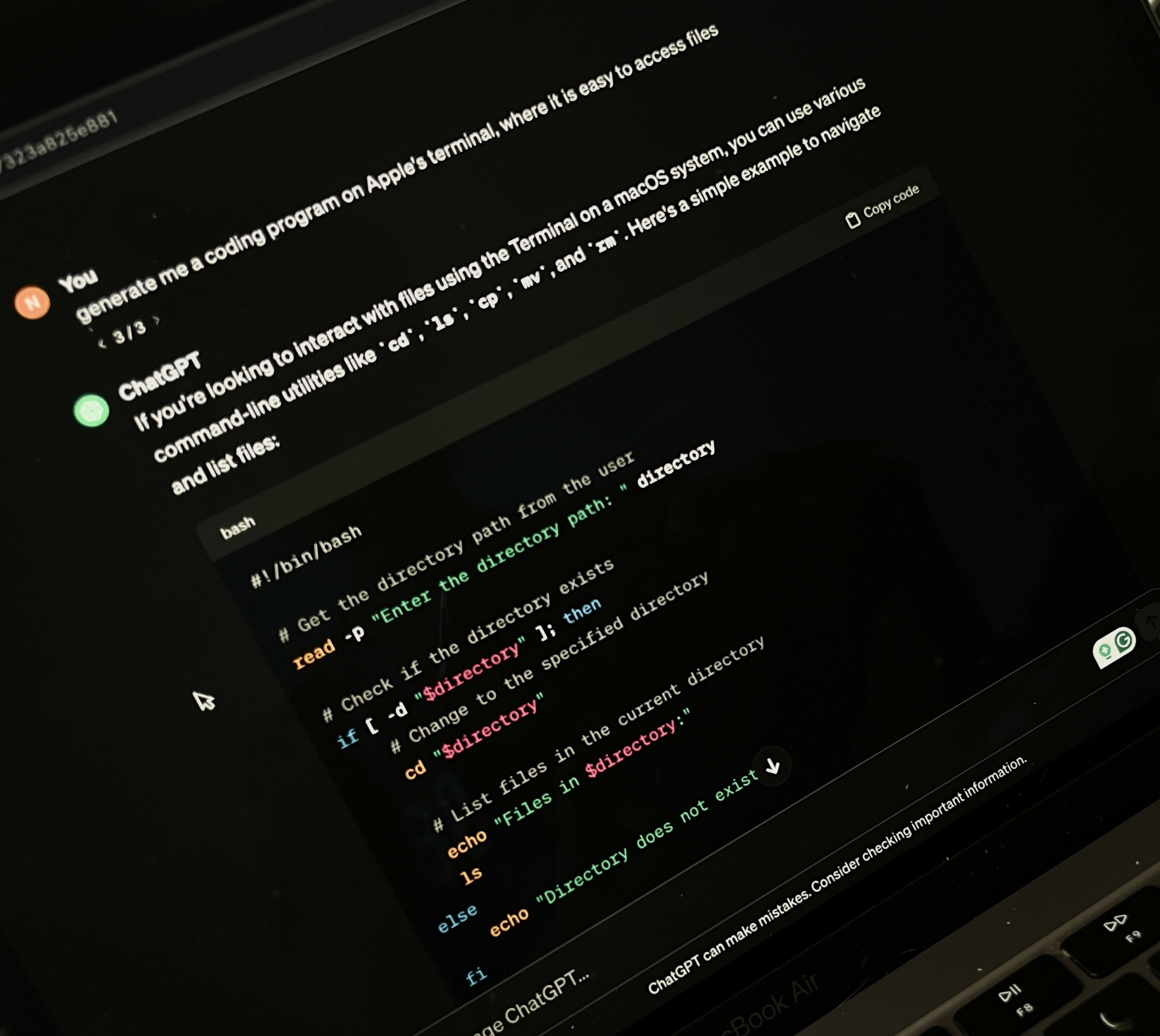

AI as a danger to security.

Jeffrey Ewert was an inmate serving two concurrent life sentences following his 1984 conviction for murder and attempted murder. In assessing his risk of reoffending, the Correctional Service of Canada relied on data that did not apply to him to help make decisions about his case.

The Correctional Service of Canada frequently uses a variety of statistical databases to make decisions in the criminal justice system, such as determinations on bail, sentencing or conditions of sentences. A computerized legal system is not some far-off futuristic fantasy. The new horizon of the legal system is one that is digitized.

In a directive, the government has stated that it is committed to deploying automated decision systems in a way that leads “to more efficient, accurate, consistent and interpretable decisions made pursuant to Canadian law.” Humans are inherently irrational beings who make unreasonable decisions every day. AI could be the solution that remedies any discrepancies or unfairness in the justice system.

Ewert is a Métis man. The assessment program used to estimate his chance of reoffending was developed and tested on predominantly non-Indigenous populations, with no research substantiating that its results were consistent when the offender was Indigenous.

AI, though efficient and accurate, is still a man-made creation. AI algorithms are designed to emulate human intelligence through human-inputted data and functions. If AI is biased at the point of its creation, then it is trained to perpetuate the very biases it seeks to avoid. If predictive policing tools are developed with data in which marginalized groups are overrepresented, then they are bound to reinforce patterns of racist policing practices.

Administrative justice concerns itself with how decisions are made. Decisions are only valid if they are reasonable. In the case of Canada v. Vavilov, it was outlined that reasonable decisions are made only through a “coherent and rational chain of analysis” that is “justified in relation to the facts and law” of the case. A decision that was made reasonably would feature elements of “justification, transparency and intelligibility.”

Deciding whether a decision was reasonable in the first place requires that the reasons for the decision are known. There is a reason why judges carefully craft long, elaborate explanations of the reasoning behind their decisions. Decisions are meant to be scrutinized to ensure that the fundamental principles of the justice system are being upheld and that decision makers are accountable to the public.

The same transparency does not currently exist for AI systems used in the legal system. Analyzing the coding and the data inputted, and requiring AI to explain the reasons for its results, would defeat the purpose of introducing it in the first place. That doesn’t mean that AI should be trusted blindly. It should be open to the same degree of scrutiny as any decision maker in the justice system.

Nye Thomas, the executive director of the Law Commission of Ontario, highlighted this point by saying, “Regulators, the legal profession, judges, governments, and civil society organizations will have to think about how the technology can be used most effectively and appropriately.”

The future of the world is digital. To not modernize the legal system is to ignore the current reality of the world. AI could be revolutionary in altering the legal system, but it should be approached with a degree of caution rather than welcomed with open arms. The true issue is not with the AI itself, but with the accountability for the creation, implementation and consequences of its use.

This article was originally published in print Volume 23, Issue 7 on Thursday, March 7.